45 confident learning estimating uncertainty in dataset labels

Generalisation effects of predictive uncertainty ... Uncertainty estimation is an important topic in deep learning research that has the potential to provide better-calibrated predictions and to increase the robustness of neural networks.

Estimating Uncertainty in Machine Learning Models - Part 3 ... Check out part 1 and part 2 of this series. Author: Dhruv Nair, data scientist, Comet.ml. In the last part of our series on uncertainty estimation, we addressed the limitations of approaches like bootstrapping for large models, and demonstrated how we might estimate uncertainty in the predictions of a neural network using MC Dropout. So far, the approaches we looked at involved creating ...

[R] Announcing Confident Learning: Finding and Learning ... Confident learning (CL) has emerged as an approach for characterizing, identifying, and learning with noisy labels in datasets, based on the principles of pruning noisy data, counting to estimate noise, and ranking examples to train with confidence.
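The three principles named above (counting, pruning, ranking) can be illustrated with a minimal sketch in plain Python. It assumes integer labels and one row of predicted class probabilities per example, such as the output of a classifier's predict_proba, ideally computed out-of-sample. The function name `confident_label_issues` and the toy data are hypothetical and are not part of the cleanlab API; the sketch also skips the calibration steps of the full method.

```python
def confident_label_issues(labels, pred_probs):
    """Simplified confident-learning sketch (hypothetical helper, not cleanlab).

    labels:     observed (possibly noisy) integer labels in 0..K-1
    pred_probs: one list of K class probabilities per example
    Returns (confident_joint, issue_indices).
    """
    n_classes = len(pred_probs[0])

    # Counting, step 1: the threshold for class j is the mean predicted
    # probability of class j over the examples whose observed label is j.
    sums = [0.0] * n_classes
    counts = [0] * n_classes
    for y, probs in zip(labels, pred_probs):
        sums[y] += probs[y]
        counts[y] += 1
    thresholds = [
        sums[j] / counts[j] if counts[j] else float("inf")
        for j in range(n_classes)
    ]

    # Counting, step 2: an example is counted in cell [observed][guessed] when
    # its probability for the guessed class reaches that class's threshold.
    confident_joint = [[0] * n_classes for _ in range(n_classes)]
    issues = []
    for idx, (y, probs) in enumerate(zip(labels, pred_probs)):
        candidates = [j for j in range(n_classes) if probs[j] >= thresholds[j]]
        if not candidates:
            continue  # no class is confidently predicted for this example
        guess = max(candidates, key=lambda j: probs[j])
        confident_joint[y][guess] += 1
        if guess != y:
            issues.append(idx)  # pruning candidate: off-diagonal entry

    # Ranking: least self-confident first (probability of the observed label).
    issues.sort(key=lambda i: pred_probs[i][labels[i]])
    return confident_joint, issues


# Toy data: examples 2 and 5 look mislabeled.
labels = [0, 0, 0, 1, 1, 1]
pred_probs = [
    [0.9, 0.1], [0.8, 0.2], [0.2, 0.8],
    [0.1, 0.9], [0.3, 0.7], [0.7, 0.3],
]
confident_joint, issues = confident_label_issues(labels, pred_probs)
print(confident_joint)  # [[2, 1], [1, 2]]
print(issues)           # [2, 5]
```

The off-diagonal cells of the count matrix are the examples a reviewer would inspect or prune first. On real data the probabilities should come from cross-validation, so that the model has not been trained on the labels it is judging.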

Confident learning estimating uncertainty in dataset labels

Find label issues with confident learning for NLP. Estimate noisy labels: We use the Python package cleanlab, which leverages confident learning to find label errors in datasets and to learn with noisy labels. It's called cleanlab because it CLEANs LABels. cleanlab is: fast - single-shot, non-iterative, parallelized algorithms

Rectifying Pseudo Label Learning via Uncertainty ... To overcome the problem, this paper proposes to explicitly estimate the prediction uncertainty during training to rectify the pseudo label learning for unsupervised semantic segmentation adaptation. Given the input image, the model outputs the semantic segmentation prediction as well as the uncertainty of the prediction.

Prediction with machine learning. Machine Learning for AF Risk Prediction to target AF screening. Apr 06, 2020 · The next step is to transform the dataset into a data frame. Feb 17, 2021 · A prediction from a machine learning perspective is a single point that hides the uncertainty of that prediction.

Characterizing Label Errors: Confident Learning for Noisy ... 2.2 The Confident Learning Module. Based on the assumption of Angluin, CL can identify the label errors in datasets and improve training with noisy labels by estimating the joint distribution between the noisy (observed) labels \(\tilde{y}\) and the true (latent) labels \(y^*\). Remarkably, no hyper-parameters and few extra ...

Confident Learning: Estimating Uncertainty in Dataset Labels. Confident learning (CL) is an alternative approach which focuses instead on label quality by characterizing and identifying label errors in datasets, based on principles of pruning noisy data, counting with probabilistic thresholds to estimate noise, and ranking examples to train with confidence.

An Introduction to Confident Learning: Finding and ... I recommend mapping the labels to 0, 1, 2. Then after training, when you predict, you can call classifier.predict_proba() and it will give you the probabilities for each class. So an example with 50% probability of class label 1 and 50% probability of class label 2 would give you the output [0, 0.5, 0.5].
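For readers who want the counting step behind the joint-distribution estimate in symbols, the following is a sketch in the notation of the Confident Learning paper. The symbols \(\hat{p}\) (the model's predicted probability), \(t_j\) (the per-class threshold), \(X_{\tilde{y}=i}\) (the examples observed with label \(i\)), \(C\) (the confident joint) and \(\hat{Q}\) (the estimated joint) are not defined in the snippets above and should be checked against the paper.

\[
t_j = \frac{1}{|X_{\tilde{y}=j}|} \sum_{x \in X_{\tilde{y}=j}} \hat{p}(\tilde{y}=j; x)
\]

\[
C_{\tilde{y},y^*}[i][j] = \left| \left\{ x \in X_{\tilde{y}=i} : \hat{p}(\tilde{y}=j; x) \ge t_j,\; j = \arg\max_{l \,:\, \hat{p}(\tilde{y}=l; x) \ge t_l} \hat{p}(\tilde{y}=l; x) \right\} \right|
\]

\[
\hat{Q}_{\tilde{y}=i,\, y^*=j} = \frac{\dfrac{C_{\tilde{y},y^*}[i][j]}{\sum_{j'} C_{\tilde{y},y^*}[i][j']} \, |X_{\tilde{y}=i}|}{\sum_{i',j'} \left( \dfrac{C_{\tilde{y},y^*}[i'][j']}{\sum_{j''} C_{\tilde{y},y^*}[i'][j'']} \, |X_{\tilde{y}=i'}| \right)}
\]

In words: each class threshold is the average confidence the model assigns to that class among examples carrying that label; the confident joint counts examples whose probability for some class reaches its threshold; and the counts are rescaled so that each row matches the number of examples observed with that label before normalizing to sum to one. Off-diagonal mass in \(\hat{Q}\) then estimates the fraction of mislabeled examples for each (observed, true) label pair.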

Confident Learning: Estimating Uncertainty in Dataset Labels ... the CIFAR dataset. The results presented are reproducible with the implementation of CL algorithms, open-sourced as the cleanlab Python package. These contributions are presented beginning with the formal problem specification and notation (Section 2), then defining the algorithmic methods employed for CL (Section 3)

Confident Learning study notes. Paper: Confident Learning: Estimating Uncertainty in Dataset Labels, Curtis. Problem addressed by the paper. Goal: handle label noise. Method: for noisy data, previous work is described as model-centric, meaning it modifies the loss function or the model, whereas the work in this paper is described as data-centric.

My favorite Machine Learning Papers in 2019 | by Akihiro ... Unsupervised representation learning: MoCo, which performs something like metric learning with a dictionary. The key network is gradually updated to be closer to the query network. It can improve the accuracy of...

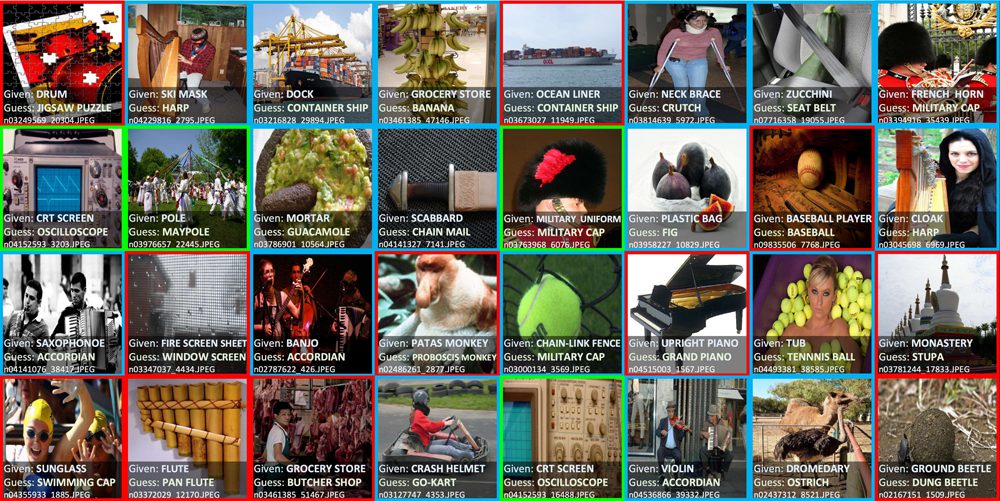

Confident Learning: Estimating Uncertainty in Dataset Labels. Abstract. Learning exists in the context of data, yet notions of confidence typically focus on model predictions, not label quality. Confident learning (CL) is an alternative approach which focuses instead on label quality by characterizing and identifying label errors in datasets, based on the principles of pruning noisy data, counting with probabilistic thresholds to estimate noise, and ranking examples to train with confidence.

Confident Learning: Is That Label Correct? - 学習する天然ニューラルネット. What is this? The paper Confident Learning: Estimating Uncertainty in Dataset Labels, submitted to ICML 2020, was very interesting, so I am publishing a summary of it. Paper: [1911.00068] Confident Learning: Estimating Uncertainty in Dataset Labels. Quick overview: datasets contain examples whose labels are wrong (noisy labels). Detecting such samples ...

cleanlab · PyPI. Comparison of confident learning (CL), as implemented in cleanlab, versus seven recent methods for learning with noisy labels in CIFAR-10. Highlighted cells show CL robustness to sparsity. The five CL methods estimate label issues, remove them, then train on the cleaned data using Co-Teaching.

Confident Learning: Estimating Uncertainty in Dataset Labels, by CG Northcutt · 2021 · Cited by 172. Confident learning (CL) is an alternative approach which focuses instead on label quality by characterizing and identifying label errors in datasets, based on ... Journal reference: Journal of Artificial Intellige... Cite as: arXiv:1911.00068

Data Noise and Label Noise in Machine Learning | by Till ... Some defence strategies, particularly for noisy labels, are described in brief. There are several more techniques to discover and to develop. Uncertainty Estimation This is not really a defense itself, but uncertainty estimation yields valuable insights in the data samples.

Chipbrain Research | ChipBrain | Boston Confident Learning: Estimating Uncertainty in Dataset Labels By Curtis Northcutt, Lu Jiang, Isaac Chuang. Learning exists in the context of data, yet notions of confidence typically focus on model predictions, not label quality. Confident learning (CL) is an alternative approach which focuses instead on label quality by characterizing and ...

Tag Page | L7 An Introduction to Confident Learning: Finding and Learning with Label Errors in Datasets. This post overviews the paper Confident Learning: Estimating Uncertainty in Dataset Labels authored by Curtis G. Northcutt, Lu Jiang, and Isaac L. Chuang. machine-learning confident-learning noisy-labels deep-learning.
